This article will give you an overview of how to automate the deep learning model hyper-parameter tuning.

Article Outline

- Introduction

- About Dataset

- Loading Dataset

- Data Preprocessing

- Setting Model Configuration

- Model Tuning Strategy

- Identifying the best model parameters

- Retraining with best parameters

- Retrieving mean and standard deviation of CV score

- Tutorial Code

Introduction

Currently, deep learning is being used in solving a variety of problems, such as image recognition, object detection, text classification, speech recognition (natural language processing), sequence prediction, neural style transfer, text generation, image reconstruction and many more.

It is the technology used behind self-driving cars, speech recognition used in Siri, Alexa or Google, photo tagging on Facebook, song recommendation on Spotify and product recommendation engines. Now even researches are using deep learning to understand complex patterns in data, for example detecting glaucoma in diabetes patients, disaster management (earthquake and flood predictions), new material development, fake news detection, robotics and biomechanics. For better understanding the practical application of deep learning, I will recommend you to watch the YouTube Series “The Age of A.I.”.

There are many tools available to train a deep neural network. For research work, researchers use programming language and libraries/packages to implement such complex models, as it provides more flexibility and one can modify the model as per work requirement. Nowadays training a deep neural network is very easy, thanks to François Chollet fordeveloping Keras deep learning library. Using Keras, one can implement a deep neural network model with few lines of code.

The problem starts when as a researcher you need to find out the best set of hyperparameters that gives you the most accurate model/solution. Manually trying each set of parameters could be very time consuming and exhausting. Here, KerasRegressor class, which act as a wrapper ofscikit-learn’s library in Keras comes as a handy tool for automating the tuning process.

In this article, we will learn step by step, how to tune a Keras deep learning regression model and identify the best set of hyperparameters. Same can be applied for the classification model.

About Dataset

I have a Transportation Engineering (Civil Engineering Domain) background. During my civil engineering Diploma, B.Tech and M.Tech I had performed the Concrete’s Characteristics Compressive Strength test in a laboratory setting. Thus, I thought it would be interesting to model the concrete’s compressive strength using a deep learning model.

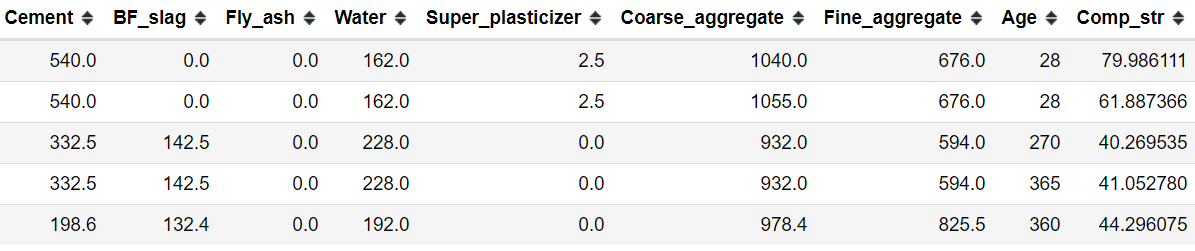

Hence, in this article, we are going to use the concrete dataset [1] obtained from the UCI Machine Learning library.

The dataset includes the following variables, which are the ingredients for making durable high strength concrete.

I1: Cement (C1): kg in a m3 mixture

I2: Blast Furnace Slag (C2): kg in a m3 mixture

I3: Fly Ash (C3): kg in a m3 mixture

I4: Water (C4): kg in a m3 mixture

I5: Superplasticizer (C5): kg in a m3 mixture

I6: Coarse Aggregate (C6): kg in a m3 mixture

I7: Fine Aggregate (C7): kg in a m3 mixture

I8: Age: Day (1~365)

O1: Concrete compressive strength: MPa

Where I: Input; O: Output, C: Component; m3: meter cube and MPa: Megapascal.

Before proceeding to the data analysis part, let’s get familiar with the different inputs of the concrete dataset.

Concrete

Concrete is comprised of three basic components: water, aggregate (rock, sand, or gravel) and cement. Cement acts as a binding agent when mixed with water and aggregates.

Compressive Strength

Compressive strength is one of the vital parameters that determine the performance as a construction material. A concrete mix designed to get the required performance and durability for a given construction work/project. The compressive strength of concrete is determined in laboratories in order to maintain the desired quality of concrete during casting. The compressive strength is calculated by dividing the failure load with the area of application of load, usually after 28 days (I8: Age) of the curing period. Though researchers also report strength after 7, 14 and 21 days of curing period. The strength of concrete is achieved by controlling the proportion of cement (C1), fine (C7) and coarse (C6) aggregates, water, and various admixtures. The characteristic compressive strength of concrete fc/ fck is usually reported in MPa (O1). For normal Construction, the characteristic compressive strength can vary from 10 to 60 MPa; while for a certain structure the requirement can go beyond 600 MPa.

Admixture

Nowadays, researchers are using different admixtures to get desired property; the fly ash (C3) is one of them. The fly ash act as an admixture in concrete mixes, which is a pozzolan substance containing aluminous and siliceous material; when mixed with lime and water, forms a compound similar to cement. Fly ash is mixed in concrete as an admixture to improve workability and to reduce permeability and bleeding.

Similarly, the ground granulated blast furnace slag (C2), a mineral admixture is added in concrete to improves its properties such as workability, strength and durability.

Superplasticizers

Superplasticizers (high range water reducers) are used in concrete mixes for making high strength durable concrete. Superplasticizers (C5) are water-soluble organic substances that reduce the amount of water require to achieve certain stability of concrete, reduce the water-cement ratio, reduce cement content and increase slump. Use of superplasticizers reduces the water requirementup to 30% without losing workability.

Aim

The aim of the modelling is to predict the characteristic compressive strength of concrete (regression problem) based on the given input components (cement, blast furnace slag, fly ash, water, superplasticizers, coarse and fine aggregates, and Age).

Here, we will try to find out the best set of hyperparameters that minimizes the loss function to the maximum extend. In other words, we will look for the parameter set that provides the most accurate solution.

Loading relevant libraries

The very first step is to load relevant python libraries

import numpy as np #for array manipulation

import pandas as pd #data manipulation

from sklearn import preprocessing #scaling

import keras

from keras.layers import Dense #for Dense layers

from keras.layers import BatchNormalization #for batch normalization

from keras.layers import Dropout #for random dropout

from keras.models import Sequential #for sequential implementation

from keras.optimizers import Adam #for adam optimizer

from keras import regularizers #for l2 regularization

from keras.wrappers.scikit_learn import KerasRegressor

from sklearn.model_selection import RandomizedSearchCV

from sklearn.model_selection import KFold

from sklearn.model_selection import cross_val_scoreLoading dataset

The next step is to load the data from an excel sheet from your local storage and performing basic exploratory data analysis.

concrete = pd.read_excel('Concrete_Data.xlsx')

concrete.head()

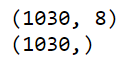

Defining input and target data

The next step is to assign the input columns (components) to train_inputs, and output/target column to train_targets variable. We need to convert the data to a NumPy array using .values method before feeding into the neural network model. The dataset includes 1030 observations and 8 columns.

train_inputs = concrete.drop("Comp_str", axis = 1).values

train_targets = concrete["Comp_str"].values

print(train_inputs.shape)

print(train_targets.shape)

Data Preprocessing

Standardization of datasets is a common requirement for many machine learning estimators; else they might behave badly if the individual features do not more or less look like standard normally distributed data: Gaussian with zero mean and unit variance. So, the next step is to scale data so that it has zero mean and unit variance.

train_inputs = preprocessing.scale(train_inputs)Setting Model Configuration

To perform hyperparameter tuning the first step is to define a function comprised of the model layout of your deep neural network. Here, is the step by step guide for defining the function named create_model.

Step1: The very first step is to define a function create_model where we have initiated default arguments learning_rate = 0.01, and activation function “relu”. Don’t worry these are default and later we will tweak them for tuning purposes.

def create_model(learning_rate = 0.01, activation = 'relu')Step2: The next step is to set our optimizer. Here we have selected Adam optimizer and initiated with our default argument learning rate value.

# Use Adam optimizer with the given learning rate

opt = Adam(lr = learning_rate)Step3: The first layer always needs an input shape. Here, the input shape is the number of columns in the training dataset. We extracted the number of columns input using the .shape method and indexing the second value.

n_cols = train_inputs.shape[1]

input_shape = (n_cols, )Step4: The next step is to define the sequential layout of your model. Here, we used two dense layers of 128 hidden neurons. The activation is set to the default argument i.e. “relu” and we also set an l2 regularization to penalize large weights and to improve representation learning. To make the representation learning more robust we added Dropout layer that drops 50% of the connections randomly.

# Create your binary classification model

model = Sequential()

model.add(Dense(128,

activation = activation,

input_shape = input_shape,

activity_regularizer = regularizers.l2(1e-5)))

model.add(Dropout(0.50))

model.add(Dense(128,

activation = activation,

activity_regularizer = regularizers.l2(1e-5)))

model.add(Dropout(0.50))

model.add(Dense(1, activation = activation))Step5: The next step is to compile the model. For compilation, we need an optimizer and a loss function. Here we have opted for the Adam optimizer and as this is a regression task hence we opted for “mean_absolute_error” loss function. We choose mae as it is more robust to outlier than mse. To keep track of the other errors we set other two metrics which are mean absolute error (mse) and mean absolute percentage error (mape).

# Compile the model

model.compile(optimizer = opt,

loss = "mean_absolute_error",

metrics=['mse', "mape"])

return modelHere is the overall blueprint of model configuration:

n_cols = train_inputs.shape[1]

input_shape = (n_cols, )

# Creates a model given an activation and learning rate

def create_model(learning_rate = 0.01, activation = 'relu'):

# Create an Adam optimizer with the given learning rate

opt = Adam(lr=learning_rate)

# Create your binary classification model

model = Sequential()

model.add(Dense(128,

activation = activation,

input_shape = input_shape,

activity_regularizer = regularizers.l2(1e-5)))

model.add(Dropout(0.50))

model.add(Dense(128,

activation = activation,

activity_regularizer = regularizers.l2(1e-5)))

model.add(Dropout(0.50))

model.add(Dense(1, activation = activation))

# Compile the model

model.compile(optimizer = opt,

loss = "mean_absolute_error",

metrics=['mse', "mape"])

return modelDefining Model Tuning Strategy

The next step is to set the layout for hyperparameter tuning.

Step1: The first step is to create a model object using KerasRegressor from keras.wrappers.scikit_learn by passing the create_model function.We set verbose = 0 to stop showing the model training logs. Similarly, one can use KerasClassifier for tuning a classification model.

# Create a KerasRegressor

model = KerasRegressor(build_fn = create_model,

verbose = 0)tep2: Next step is to define the hyperparameter search space. Here, we will try the following common hyperparameters:

activation function: relu and tanh

batch size: 16, 32 and 64

epochs: 50 and 100

learning rate: 0.01, 0.001 and 0.0001

# Define the parameters to try out

params = {'activation': ["relu", "tanh"],

'batch_size': [16, 32, 64],

'epochs': [50, 100],

'learning_rate': [0.01, 0.001, 0.0001]}Step3: Next we will perform a randomized cross-validation search across the parameter space using RandomizedSearchCV function. We selected the randomized search as it works faster than a grid search. Here, we will perform a 10 fold cross-validation search. For smaller datasets, creating a separate validation dataset costs training data thus, in such scenarios cross-validation technique could be a better model training approach.

random_search = RandomizedSearchCV(model,

param_distributions = params,

cv = KFold(10))Step4: Next, we will fit the model to our train_inputs and train_targets.

random_search_results = random_search.fit(train_inputs, train_targets)Here, is the blueprint of overall model tuning layout.

# Create a KerasClassifier object

model = KerasRegressor(build_fn = create_model,

verbose = 0)

# Define the hyperparameter space

params = {'activation': ["relu", "tanh"],

'batch_size': [16, 32, 64],

'epochs': [50, 100],

'learning_rate': [0.01, 0.001, 0.0001]}

# Create a randomize search cv object

random_search = RandomizedSearchCV(model,

param_distributions = params,

cv = KFold(10))

random_search_results = random_search.fit(train_inputs, train_targets)Identifying best parameters

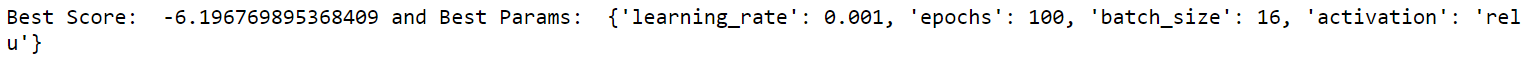

The model with the best parameters has achieved a Mean Absolute Error (MAE) of 6.197 (approx.). The best model performance is achieved with a learning rate of 0.001, epochs size of 100, batch_size of 16 and with a relu activation function.

print("Best Score: ",

random_search_results.best_score_,

"and Best Params: ",

random_search_results.best_params_)

Why negative score

The actual MAE is simply the positive version of the number we’re getting.

The unified scoring API always maximizes the score, so scores which need to be minimized are negated in order for the unified scoring API to work correctly. The score that is returned is therefore negated when it is a score that should be minimized and left positive if it is a score that should be maximized. You can read more about this here.

Re-evaluating Model with the Best Parameter Set

The next task is to refit the model with the best parameters i.e., learning rate of 0.001, epochs size of 100, batch_size of 16 and with a relu activation function. Here, we compute the mean and standard deviation of the 10-fold cross-validation score to see the variation in output loss.

n_cols = train_inputs.shape[1]

input_shape = (n_cols, )

# Create the model object with default arguments

def create_model(learning_rate = 0.001, activation='relu'):

# Set Adam optimizer with the given learning rate

opt = Adam(lr = learning_rate)

# Create your binary classification model

model = Sequential()

model.add(Dense(128,

activation = activation,

input_shape = input_shape,

activity_regularizer = regularizers.l2(1e-5)))

model.add(Dropout(0.50))

model.add(Dense(128,

activation = activation,

activity_regularizer = regularizers.l2(1e-5)))

model.add(Dropout(0.50))

model.add(Dense(1, activation = activation))

# Compile the model

model.compile(optimizer = opt,

loss = "mean_absolute_error",

metrics = ['mse', "mape"])

return modelRetrieving mean K-Fold score and standard deviation

Here, we again use the KerasRegressor to create the model object and calculate the accuracy score for each fold using the cross_val_score( ) function.

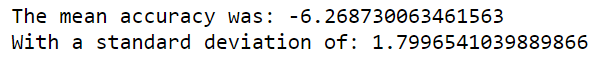

The result revealed that with the best parameters, the 10-fold CV model has achieved a mean value of Mean Absolute Error (MAE) of 6.269 (approx.) and a standard deviation of 1.799.

Create a KerasClassifier

model = KerasRegressor(build_fn = create_model,

epochs = 100,

batch_size = 16,

verbose = 0)

# Calculate the accuracy score for each fold

kfolds = cross_val_score(model,

train_inputs,

train_targets,

cv = 10)

# Print the mean accuracy

print('The mean accuracy was:', kfolds.mean())

# Print the accuracy standard deviation

print('With a standard deviation of:', kfolds.std())

Now, you have an approximate idea of the best set of parameters that would give you the most accurate solution. Next, you can use this set of hyperparameters to train a model and test on the unseen dataset to see whether the model generalizes on the unseen dataset.

Note

Keras based hyperparameter search is very very resource and time-consuming. This model training took more than 1 hour in my local machine (i7, 16 GB RAM), even after using NVIDIA GPU. The hyperparameter tuning froze my PC several times. So, keep patience while tuning this type of large computationally expensive models. It is better to perform such task using cloud-based services.

I hope you learned something new from this blog.

Image Credit: Photo by Muukii on Unsplash

References

[1] I-Cheng Yeh, “Modeling of the strength of high-performance concrete using artificial neural networks,” Cement and Concrete Research, Vol. 28, №12, pp. 1797–1808 (1998).