Every type of research requires data. The data could be primary data (collected by you) or secondary data (collected by someone else). In the research process, it is always recommended to first build your hypothesis (what do you expect from the data?) and then plan and collect the data. Once you have the data, the most interesting and most vital part begins: preparing the data for analysis (cleaning and wrangling). In data science, this step is often grouped under data engineering. In addition, you need techniques for extracting vital information from the datasets, and these techniques are known as statistical analysis.

Traditionally, researchers carried out this whole process using traditional software programs. I’m a transportation researcher, and I have used statistical analysis tools like Excel, SPSS, Stata, Minitab and so on. These programs are good, and I still use them, but they are expensive and not flexible to work with. This is where open-source programming languages bridge the gap. Today, you can use data-science-friendly programming languages like R, Python and Julia to analyse data and retrieve meaningful results. They are free, fast, user-friendly and, most importantly for research work, reproducible.

As a transportation engineering PhD student, I have to perform many statistical analyses on collected datasets to retrieve meaningful results. I have been learning data science since 2017. Since then, I have read numerous books and completed numerous courses on various topics, and I have gone through many ups and downs in the learning process. After almost 3.5 years of learning, I thought I should share the steps one should take when starting from scratch in 2022. In this blog, based on my experience, I will share the books and courses that will give you hands-on experience in “Data Science”.

If I had to start from the beginning, I would definitely start with Python.

Why? My answer: “it is easy to learn”.

To dive into data science, you first need a data-crunching tool like Python. Let’s begin the journey.

1. A Whirlwind Tour of Python

“A Whirlwind Tour of Python” is the best book I have come across for learning and revising the basics of the Python programming language. It covers what you need to become proficient in Python and will help you grasp its basic concepts, including variables & objects, operators, built-in data types & data structures, control flow (if, elif, else, loops and so on), functions, and errors & exception handling. It also covers some higher-level concepts that you will need later during data analysis, like iterators, list comprehensions, generators, modules & packages, and string manipulation (regular expressions).
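Here is a small sketch that makes the higher-level features concrete. It combines a generator, exception handling and a list comprehension to clean a list of raw strings. The function name and data are hypothetical.

```python
def parse_numbers(raw_values):
    """Yield floats from raw strings, skipping entries that cannot be parsed."""
    for value in raw_values:
        try:
            yield float(value)
        except ValueError:
            # Skip values such as "n/a" or "" instead of crashing
            continue

raw = ["12.5", "n/a", "7", "3.25", ""]

# Generator: values are produced lazily, one at a time, then collected
numbers = list(parse_numbers(raw))

# List comprehension: keep only the values above a threshold
large = [x for x in numbers if x > 5]

print(numbers)  # [12.5, 7.0, 3.25]
print(large)    # [12.5, 7.0]
```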

2. 100 Days of Code: The Complete Python Pro Bootcamp for 2022

Udemy Course Link: 100 Days of Code: The Complete Python Pro Bootcamp for 2022

Once you have a basic understanding of Python and its syntax, you can take the Udemy course “100 Days of Code: The Complete Python Pro Bootcamp for 2022”. I recommend this course because of Dr. Angela Yu’s wonderful teaching style and carefully curated projects. The course takes you from beginner to advanced modules. It will give you confidence and help you build the intuition to structure a problem before solving it. It also teaches Python’s object-oriented programming (OOP) and how to use it for large projects (💡 like building a game).

3. Data Structures & Algorithms — Python

Udemy Course Link: Data Structures & Algorithms — Python

When I started learning Python and data science, I assumed that data structures and algorithms were only for software engineers. Over the years, I realized that they matter for data scientists and analysts too, because they teach you how to break a problem down and solve it step by step with efficient code.

I have tried various courses, and the ones I liked most teach data structures and algorithms with visual, animated graphics. The course “Data Structures & Algorithms — Python” gives you a wonderful visual and code-based introduction to various data structures and algorithms using Python.
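Here is a small example of solving a problem step by step with efficient code: a minimal binary search over a sorted list. The station IDs are made up. Binary search needs about log2(n) comparisons, whereas a linear scan may need n. In practice, Python’s standard `bisect` module covers this use case.

```python
def binary_search(sorted_items, target):
    """Return the index of target in sorted_items, or -1 if it is absent.

    Each step halves the search range, so the running time is O(log n)
    instead of the O(n) of a linear scan.
    """
    low, high = 0, len(sorted_items) - 1
    while low <= high:
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            return mid
        if sorted_items[mid] < target:
            low = mid + 1   # target can only be in the right half
        else:
            high = mid - 1  # target can only be in the left half
    return -1

station_ids = [3, 8, 15, 21, 42, 57, 90]
print(binary_search(station_ids, 42))  # 4
print(binary_search(station_ids, 5))   # -1
```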

4. The Git & Github Bootcamp

Udemy Course Link: The Git & Github Bootcamp

Before entering the world of data science, I recommend learning Git and GitHub (version control). This will give you a solid understanding of how large projects are developed collaboratively. The course “The Git & Github Bootcamp” by Colt Steele will teach you how to track projects, monitor changes, and go back to a previous version. In short, Git is a powerful tool.

The course teaches how Git works behind the scenes, the basic Git workflow (adding & committing), merging, and resolving merge conflicts. It also covers advanced topics like local and remote repositories, squashing, cleaning up and rewriting history, writing Git aliases, hosting static websites, working with branches, undoing changes (using git restore, git revert and git reset), and collaboration workflows (pull requests, “fork & clone”, etc.).

5. Pandas 1.x Cookbook

Once you are familiar with Python, its syntax and Git, the next step is to enter the world of data science. I recommend starting with the book “Pandas 1.x Cookbook”, which teaches pandas, a data manipulation library. It will teach you how to load datasets, clean data, impute missing values and extract meaningful results from datasets. In short, it is the book you need to clean and prepare data.

The book covers the DataFrame, exploratory analysis, selecting subsets of data, filtering rows, grouping and aggregation, restructuring data into a tidy form, and combining pandas objects. It also covers date and time manipulation (for time series analysis), data visualisation (using the matplotlib, pandas and seaborn libraries), and debugging and testing.

6. Google Sheets — The Comprehensive Masterclass

Udemy Course Link: Google Sheets — The Comprehensive Masterclass

Even though Python libraries are good enough for data analysis, I still believe you should learn at least one spreadsheet tool to further shape your skills. I recommend Google Sheets: it is free, and its cloud-based integration and sharing make it outstanding for collaborative work. The course “Google Sheets — The Comprehensive Masterclass” by Leila Gharani is one of the best courses available on the internet.

This course covers sorting, filtering, creating filter views and cleaning data. It also gives you a detailed, hands-on overview of functions such as VLOOKUP, INDEX & MATCH, FILTER & SORTN, and aggregation functions (SUM, COUNT, SUMIFS, COUNTIFS). Further, it teaches advanced topics like pivot tables, charts & slicers, macros and Google Apps Script.

7. Complete Course on Data Visualization, Matplotlib and Python

Udemy Course Link: Complete Course on Data Visualization, Matplotlib and Python

Once you understand how to manipulate data (prepare data for analysis), the next step is to get a command of Python’s basic visualisation libraries. The “Complete Course on Data Visualization, Matplotlib and Python” gives you an extensive overview of the Matplotlib and Seaborn visualisation libraries. It will build your intuition for how Matplotlib constructs different types of plots from scratch.

The course covers basic to advanced line plots, bar plots, heatmaps, box plots, violin plots, grid plots, pair plots, faceted plots using catplot, and linear model plots. It also teaches how to fine-tune the different components of a plot to make it publication-ready. Once you learn these libraries, you can build almost any plot to present useful insights.

8. The Hitchhiker’s Guide to Plotnine

Once you get the idea of Matplotlib and Seaborn, the next step is to get familiar with a low-code plotting library that will help you generate publication-ready statistical plots with a few lines of code. The plotnine library is a Python implementation of the popular “ggplot2” library, which Hadley Wickham created for the R programming language. Its plotting syntax is based on the grammar of graphics.

The book “The Hitchhiker’s Guide to Plotnine” provides a systematic, step-by-step approach to building a variety of plots. It covers line plots, area plots, bar plots, stacked bar plots, scatter plots, weighted scatter plots, histograms, density plots, function plots, box plots, linear regression plots and lowess plots.

9. Map Academy: Get mapping quickly, with QGIS

Udemy Course Link: Map Academy: Get mapping quickly, with QGIS

Next, you should add geospatial data visualization to your bucket list. It will help you present data on maps and understand spatial variability across regions and countries. Examples include transportation demand across metro stations, the spread of and recovery from COVID-19, and the impact of natural disasters across states. For this, I recommend the course “Map Academy: Get mapping quickly, with QGIS”, which teaches you QGIS, an open-source mapping and analytics tool, step by step. The course gives you hands-on experience through different real-world projects.

10. Master statistics & machine learning: intuition, math, code

Udemy Course Link: Master statistics & machine learning: intuition, math, code

Once you understand data manipulation and visualisation, you are ready to jump into the world of statistics. Statistics will help you retrieve meaningful results from data and paves the path to statistical modeling, as it is the backbone of most machine learning algorithms. The course “Master statistics & machine learning: intuition, math, code” by Mike X Cohen is a hidden gem that explains the ideas behind the different statistical approaches used for data analysis today.

It will teach you about different visualisations, descriptive statistics, inferential statistics, data normalisation and outliers, probability theory, hypothesis testing, t-tests, confidence intervals, correlation, ANOVA, regression, statistical power and sample size calculation, clustering and dimension reduction, all using Python.
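Here is a taste of inferential statistics in Python. This minimal sketch computes Welch’s two-sample t statistic and its approximate degrees of freedom with the standard library. The travel-time samples are made up. For real work, `scipy.stats.ttest_ind(a, b, equal_var=False)` computes the same statistic along with a p-value.

```python
import math
import statistics

def welch_t_statistic(sample_a, sample_b):
    """Welch's t statistic for two independent samples with possibly unequal variances."""
    mean_a, mean_b = statistics.mean(sample_a), statistics.mean(sample_b)
    # statistics.variance uses the n - 1 denominator (sample variance)
    var_a, var_b = statistics.variance(sample_a), statistics.variance(sample_b)
    n_a, n_b = len(sample_a), len(sample_b)
    standard_error = math.sqrt(var_a / n_a + var_b / n_b)
    t = (mean_a - mean_b) / standard_error
    # Welch-Satterthwaite approximation of the degrees of freedom
    df = (var_a / n_a + var_b / n_b) ** 2 / (
        (var_a / n_a) ** 2 / (n_a - 1) + (var_b / n_b) ** 2 / (n_b - 1)
    )
    return t, df

# Hypothetical travel times (minutes) on two routes
route_a = [20, 22, 19, 24, 25]
route_b = [28, 27, 30, 26, 29]
t, df = welch_t_statistic(route_a, route_b)
print(round(t, 3), round(df, 2))  # -4.472 6.68
```

A large negative t here suggests that route A is faster on average. The p-value would come from a t distribution with `df` degrees of freedom.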

In addition, I also recommend visiting these great YouTube channels to learn or revise statistical concepts.

11. Practical Statistics for Data Scientists

Once you understand the fundamental ideas behind different statistical concepts, you are ready to learn how real-life data scientists use statistics to solve real-world problems. You will see which concepts are important for data science problem-solving and which ones you need to know in detail. The book “Practical Statistics for Data Scientists” gives you hands-on experience with practical statistics for solving real-world problems.

The book covers exploratory data analysis, data and sampling distributions, statistical experiments and significance testing, regression and classification models and how they work behind the scenes, and various supervised and unsupervised machine learning algorithms.
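Sampling distributions are easier to grasp once you simulate one. A common approach is the bootstrap: resample the data with replacement many times and look at how the statistic varies. Here is a minimal percentile-bootstrap sketch for the mean. The trip distances are made up and the seed is arbitrary.

```python
import random
import statistics

def bootstrap_mean_ci(data, n_resamples=5000, confidence=0.95, seed=0):
    """Percentile bootstrap confidence interval for the mean of data."""
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    boot_means = []
    for _ in range(n_resamples):
        # Draw a resample of the same size, with replacement
        resample = rng.choices(data, k=len(data))
        boot_means.append(statistics.fmean(resample))
    boot_means.sort()
    alpha = 1 - confidence
    # Read the interval off the sorted bootstrap distribution
    lower = boot_means[round(alpha / 2 * n_resamples)]
    upper = boot_means[round((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper

# Hypothetical trip distances (km)
trips = [2.1, 3.4, 1.8, 5.0, 2.7, 4.2, 3.9, 2.2, 6.1, 3.0]
low, high = bootstrap_mean_ci(trips)
print(f"sample mean = {statistics.fmean(trips):.2f}, 95% CI ≈ ({low:.2f}, {high:.2f})")
```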

12. Bayesian Statistics the Fun Way

Now that you are familiar with the statistical concepts used in data science, it is time to learn some advanced concepts and to analyse data from a Bayesian perspective. I 100% recommend the book “Bayesian Statistics the Fun Way”, which will give you a taste of Bayesian statistics. The book starts with basic statistics and proceeds step by step towards the interesting part: solving problems the Bayesian way. It will motivate you to approach different problems in a Bayesian way. It presents various examples from Star Wars, which make the learning process fun and fascinating. Finally, it lays the foundation for Bayesian A/B testing on real-world problems.

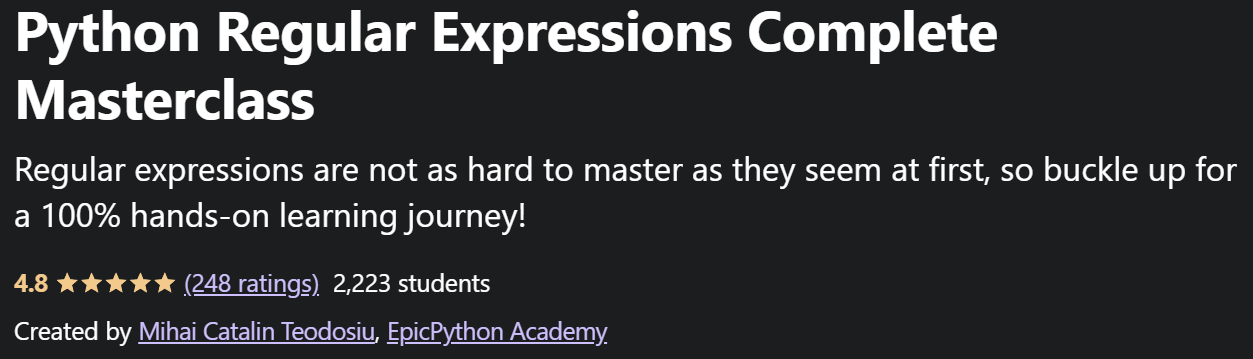
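Here is a minimal Monte Carlo sketch of the Bayesian A/B testing idea from the book. It assumes uniform Beta(1, 1) priors and uses made-up conversion counts, so it is not necessarily the book’s exact setup. With a Beta prior and binomial data, each variant’s posterior conversion rate is again a Beta distribution. We can therefore sample from both posteriors and count how often B beats A.

```python
import random

def prob_b_beats_a(conv_a, n_a, conv_b, n_b, draws=20_000, seed=42):
    """Estimate P(rate_B > rate_A) by sampling from the two Beta posteriors.

    Assumes a uniform Beta(1, 1) prior, so each posterior is
    Beta(1 + conversions, 1 + non-conversions).
    """
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        if rate_b > rate_a:
            wins += 1
    return wins / draws

# Hypothetical test: A converts 45 of 500 visitors, B converts 60 of 500
p = prob_b_beats_a(conv_a=45, n_a=500, conv_b=60, n_b=500)
print(f"P(B > A) ≈ {p:.3f}")
```

Unlike a p-value, the output answers the question a decision-maker usually asks: how likely is it that B is better than A?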

13. Python Regular Expressions Complete Masterclass

Udemy Course Link: Python Regular Expressions Complete Masterclass

Next, I recommend that everyone learn pattern matching with regular expressions, which will help you identify patterns in your data. Regular expressions are especially useful for detecting patterns in text data and for cleaning messy data. Pandas string methods also support regular expressions, which gives you an additional advantage.

The course “Python Regular Expressions Complete Masterclass” by Mihai Catalin Teodosiu is one of the best courses on the internet and teaches almost everything about regular expressions. It covers methods and objects, metacharacters & special sequences, extension notation & assertions, and a project on validating user account details. It also shows how to use regular expressions with Excel files and for filtering data scraped from HTML pages.
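Here is a small sketch of the kind of cleaning regular expressions make easy, using made-up station records. Named groups pull out the fields, and a second pattern finds ISO-formatted dates. The same patterns could be passed to Pandas string methods such as `Series.str.extract`.

```python
import re

# Hypothetical messy records: station code, passenger count, date
records = [
    "STN-001, 1,204 passengers, 2022-01-15",
    "stn-17 ,  98 passengers , 15/01/2022",
    "STN-042,  3,118 passengers, 2022-01-16",
]

# Named groups make the extracted fields self-documenting;
# IGNORECASE lets "stn-17" match as well as "STN-001"
pattern = re.compile(
    r"(?P<station>stn-\d+)\s*,\s*(?P<count>[\d,]+)\s+passengers",
    re.IGNORECASE,
)

parsed = []
for line in records:
    match = pattern.search(line)
    if match:
        station = match.group("station").upper()           # normalise case
        count = int(match.group("count").replace(",", ""))  # drop thousands separators
        parsed.append((station, count))
print(parsed)  # [('STN-001', 1204), ('STN-17', 98), ('STN-042', 3118)]

# Only ISO dates (YYYY-MM-DD) match; the 15/01/2022 entry is left out
iso_dates = re.findall(r"\b\d{4}-\d{2}-\d{2}\b", " ".join(records))
print(iso_dates)  # ['2022-01-15', '2022-01-16']
```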

14. The Ultimate MySQL Bootcamp: Go from SQL Beginner to Expert

Udemy Course Link: The Ultimate MySQL Bootcamp: Go from SQL Beginner to Expert

Companies store the data they generate day to day in databases. As a data analyst or scientist, you have to query these databases to perform analytics and retrieve meaningful results that drive business decisions. Thus, you need to learn at least one database system. My recommendation is to start with MySQL, which is mature, stable and very popular.

I recommend the course “The Ultimate MySQL Bootcamp: Go from SQL Beginner to Expert” by Colt Steele and Ian Schoonover. The course teaches creating databases and tables, inserting data, CRUD (Create, Read, Update, Delete) commands, string functions, selection, aggregation, data types, logical operators and joins (one-to-many and many-to-many). It also contains projects that will give you hands-on experience with MySQL.

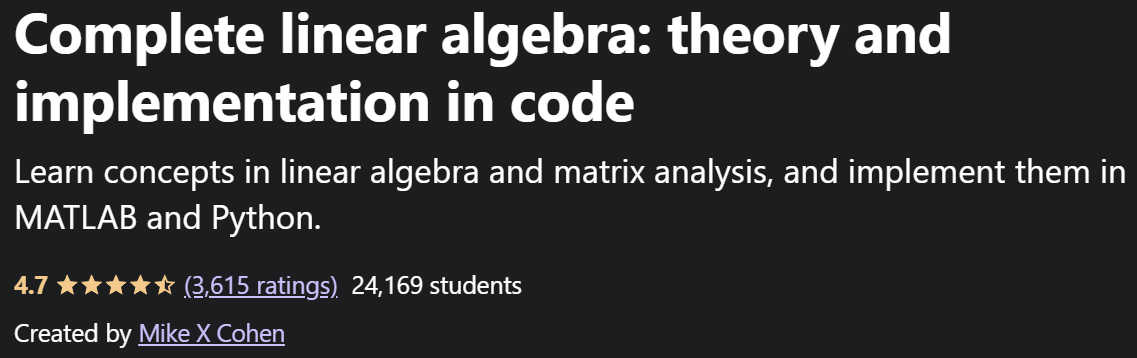
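Here is what CRUD and join queries look like in practice. This minimal sketch uses Python’s built-in `sqlite3` module as a stand-in for a MySQL server, with hypothetical `stations` and `trips` tables. The SQL is standard, but dialects differ in details. For example, MySQL would typically use `INT AUTO_INCREMENT PRIMARY KEY`, and MySQL drivers usually use `%s` placeholders instead of `?`.

```python
import sqlite3

# In-memory SQLite database as a stand-in for a MySQL server
conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Create: a one-to-many relationship (one station has many trips)
cur.execute("CREATE TABLE stations (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
cur.execute(
    "CREATE TABLE trips (id INTEGER PRIMARY KEY, "
    "station_id INTEGER REFERENCES stations(id), passengers INTEGER)"
)
cur.executemany("INSERT INTO stations (id, name) VALUES (?, ?)",
                [(1, "Central"), (2, "Airport"), (3, "Harbour")])
cur.executemany("INSERT INTO trips (station_id, passengers) VALUES (?, ?)",
                [(1, 120), (1, 95), (2, 40), (2, 60), (1, 30)])

# Update and Delete (the U and D in CRUD)
cur.execute("UPDATE trips SET passengers = 45 WHERE passengers = 40")
cur.execute("DELETE FROM trips WHERE passengers < 35")

# Read: join the tables and aggregate per station (LEFT JOIN keeps stations without trips)
cur.execute("""
    SELECT s.name, COUNT(t.id) AS n_trips, COALESCE(SUM(t.passengers), 0) AS total
    FROM stations AS s
    LEFT JOIN trips AS t ON t.station_id = s.id
    GROUP BY s.id, s.name
    ORDER BY total DESC
""")
rows = cur.fetchall()
print(rows)  # [('Central', 2, 215), ('Airport', 2, 105), ('Harbour', 0, 0)]
conn.close()
```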

15. Complete linear algebra: theory and implementation in code

Udemy Course Link: Complete linear algebra: theory and implementation in code

Before you enter the world of machine learning, you must know the basics of linear algebra if you really want to understand the underlying concepts and implement them from scratch. I recommend the course “Complete linear algebra: theory and implementation in code” by Mike X Cohen.

The course covers a wide variety of topics, including theoretical concepts in linear algebra, proofs, applications of linear algebra to real-world datasets, and solving linear algebra problems in Python (vectors and matrices). It also covers the maths underlying most AI (artificial intelligence) concepts.
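Here is a plain-Python sketch of the dot product and matrix multiplication, which shows the mechanics behind the vector and matrix operations the course implements. NumPy’s `@` operator does the same on arrays, far faster.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(u, v))

def transpose(m):
    """Swap rows and columns of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*m)]

def matmul(a, b):
    """Matrix product: entry (i, j) is the dot product of row i of a and column j of b."""
    if len(a[0]) != len(b):
        raise ValueError("inner dimensions must match")
    b_cols = transpose(b)
    return [[dot(row, col) for col in b_cols] for row in a]

A = [[1, 2],
     [3, 4]]
B = [[5, 6],
     [7, 8]]
x = [[1],
     [-1]]  # a column vector stored as a 2x1 matrix

print(matmul(A, B))  # [[19, 22], [43, 50]]
print(matmul(A, x))  # [[-1], [-1]]
```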

16. Python Feature Engineering

Before you jump into machine learning, you should know what format of data is acceptable for model building, and how to extract relevant features from datasets to improve a model’s prediction accuracy. The book “Python Feature Engineering” by Soledad Galli teaches you the entire process of feature engineering.

The book covers different techniques for missing value imputation, encoding categorical variables, transforming numerical variables, variable discretisation, handling outliers, and deriving features from date and time variables. It also covers feature scaling, applying mathematical computations to features, creating new features from transactional and time series data, and extracting features from text data.
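Three of the techniques listed above can be sketched in plain Python: missing value imputation, one-hot encoding of a categorical variable, and min-max scaling. The trip data below is made up. Libraries such as pandas and scikit-learn provide ready-made versions of these steps.

```python
import statistics

# Hypothetical raw features for five trips (None marks a missing value)
mode = ["bus", "car", None, "bus", "bike"]
distance_km = [2.0, None, 8.0, 4.0, 6.0]

# 1. Imputation: numeric -> median of observed values, categorical -> "missing" label
observed = [d for d in distance_km if d is not None]
median_distance = statistics.median(observed)
distance_filled = [median_distance if d is None else d for d in distance_km]
mode_filled = ["missing" if m is None else m for m in mode]

# 2. One-hot encoding: one binary column per category (sorted for a stable order)
categories = sorted(set(mode_filled))
one_hot = [[1 if m == c else 0 for c in categories] for m in mode_filled]

# 3. Min-max scaling of the distance to the range [0, 1]
lo, hi = min(distance_filled), max(distance_filled)
distance_scaled = [(d - lo) / (hi - lo) for d in distance_filled]

print(categories)  # ['bike', 'bus', 'car', 'missing']
print(one_hot)
print(distance_scaled)
```

In a real pipeline, the median and the min/max should be learned from the training data only and then reused on the test data, so that no information leaks from the test set.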

17. Machine Learning Bookcamp

Once you understand feature engineering, I recommend getting familiar with different machine learning algorithms. The book “Machine Learning Bookcamp” introduces you to the world of intelligent machines and systems through practical, real-world projects.

The book gives you an intuitive overview of the concepts, with minimal theory and more practical, production-ready examples. It introduces a wide variety of ML algorithms for regression and classification (linear regression, logistic regression, decision trees, ensemble learning and deep learning) using popular ML libraries such as scikit-learn and Keras.
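Here is the closed-form ordinary least squares solution for a single feature, which builds intuition for what a regression algorithm actually fits. scikit-learn’s `LinearRegression` fits the same least-squares model for many features. The distance and fare values are hypothetical.

```python
def fit_simple_linear_regression(x, y):
    """Ordinary least squares for y = intercept + slope * x with a single feature."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    # slope = cov(x, y) / var(x); the common 1/n factors cancel out
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

# Hypothetical data: trip distance (km) and fare
distance = [1, 2, 3, 4, 5]
fare = [3.1, 4.9, 7.2, 8.8, 11.0]
intercept, slope = fit_simple_linear_regression(distance, fare)
print(f"fare ≈ {intercept:.2f} + {slope:.2f} * distance")  # fare ≈ 1.09 + 1.97 * distance
```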

18. Feature Selection for Machine Learning

Udemy Course Link: Feature Selection for Machine Learning

The next step after learning feature engineering and ML algorithms is to identify influential variables. Your dataset may contain a large set of features, and some of them may be identical or very similar while others are not predictive at all. The course “Feature Selection for Machine Learning” will help you select the most important predictive variables for your model from such a set.

The course covers removing features with low variance, identifying redundant features, selecting features based on statistical tests, and selecting features based on changes in model performance.
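The first two steps mentioned above can be sketched in plain Python. The sketch drops features with zero variance, then drops one feature from each highly correlated pair. The thresholds and the feature table are assumptions for illustration. scikit-learn’s `VarianceThreshold` implements the first step.

```python
import math
import statistics

def pearson(u, v):
    """Pearson correlation coefficient of two equal-length numeric lists."""
    mu, mv = statistics.fmean(u), statistics.fmean(v)
    cov = sum((a - mu) * (b - mv) for a, b in zip(u, v))
    norm_u = math.sqrt(sum((a - mu) ** 2 for a in u))
    norm_v = math.sqrt(sum((b - mv) ** 2 for b in v))
    return cov / (norm_u * norm_v)

def select_features(columns, var_threshold=0.0, corr_threshold=0.95):
    """Return feature names kept after variance and redundancy filtering.

    columns maps each feature name to its list of values.
    """
    # Step 1: drop features whose variance does not exceed the threshold
    varied = {name: vals for name, vals in columns.items()
              if statistics.pvariance(vals) > var_threshold}
    # Step 2: keep a feature only if it is not highly correlated with one already kept
    selected = []
    for name, vals in varied.items():
        if all(abs(pearson(vals, varied[other])) <= corr_threshold for other in selected):
            selected.append(name)
    return selected

# Hypothetical feature table
features = {
    "distance_km": [1.0, 2.0, 3.0, 4.0, 5.0],
    "distance_miles": [0.62, 1.24, 1.86, 2.49, 3.11],  # same information, other unit
    "is_weekday": [1, 1, 1, 1, 1],                      # constant: zero variance
    "temperature": [18.0, 25.0, 21.0, 15.0, 23.0],
}
print(select_features(features))  # ['distance_km', 'temperature']
```

The redundancy step is greedy: it keeps whichever feature of a correlated pair appears first. In practice you might prefer to keep the one that is more predictive of the target.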

19. A deep understanding of deep learning (with Python intro)

Udemy Course Link: A deep understanding of deep learning (with Python intro)

The next step is to learn deep learning, today’s most popular family of ML methods. To start your journey with deep learning, you should first learn the concepts from scratch. The course “A deep understanding of deep learning (with Python intro)” by Mike X Cohen on Udemy gives you hands-on experience with deep learning using PyTorch, one of the most popular deep learning libraries.

The course starts with a short Python refresher, then proceeds to artificial neural networks (ANNs). It covers gradient descent, ANNs, overfitting and cross-validation, regularization, activation functions and optimizers, feedforward networks, measuring model performance, autoencoders, and running models on GPUs. It also covers advanced concepts like convolutional neural networks (CNNs), style transfer, generative adversarial networks (GANs), recurrent neural networks (RNNs), and the ethics of deep learning.
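Here is a hand-written sketch that fits a straight line by minimising mean squared error, which shows what gradient descent does before PyTorch automates it. In PyTorch, autograd computes the gradients and optimizers such as `torch.optim.SGD` apply the updates. The data is synthetic, and the learning rate and epoch count are arbitrary choices.

```python
def gradient_descent(x, y, learning_rate=0.05, epochs=2000):
    """Fit y ≈ w * x + b by minimising mean squared error (MSE) with gradient descent."""
    w, b = 0.0, 0.0  # start from zero
    n = len(x)
    for _ in range(epochs):
        # Residuals of the current model
        errors = [(w * xi + b) - yi for xi, yi in zip(x, y)]
        # Partial derivatives of MSE = (1/n) * sum(error ** 2) with respect to w and b
        grad_w = (2 / n) * sum(e * xi for e, xi in zip(errors, x))
        grad_b = (2 / n) * sum(errors)
        # Take a small step against the gradient
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Synthetic data lying exactly on y = 2x + 1
x = [0.0, 1.0, 2.0, 3.0, 4.0]
y = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = gradient_descent(x, y)
print(f"w ≈ {w:.3f}, b ≈ {b:.3f}")  # w ≈ 2.000, b ≈ 1.000
```

A neural network is trained by the same loop, just with many more parameters. If the learning rate is set too large, the updates overshoot and diverge instead of converging.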

20. Deep Learning with Python

After learning deep learning concepts and their implementation in PyTorch, I recommend learning Keras, a low-code deep learning library. The book “Deep Learning with Python” by François Chollet is one of the best books out there for hands-on experience with low-code deep learning in Python. Even though PyTorch is very popular and learning it may be enough, I still recommend adding Keras to your knowledge bucket.

21. Hyperparameter Optimization for Machine Learning

Udemy Course Link: Hyperparameter Optimization for Machine Learning

Most ML algorithms have a set of hyperparameters. For a predictive model, the objective is to identify a stable set of hyperparameters that improves performance on unseen data. The course “Hyperparameter Optimization for Machine Learning” covers the techniques required to identify the best set of hyperparameters.

This course teaches cross-validation and nested cross-validation for optimization, grid search and random search, Bayesian optimization, tree-structured Parzen estimators, SMAC, population-based optimization and other SMBO (sequential model-based optimization) algorithms. It also covers how to implement them with open-source libraries including Hyperopt, Optuna, Scikit-optimize, Keras Tuner and others.
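Here is a minimal sketch of grid search with k-fold cross-validation. It uses a tiny 1-D k-nearest-neighbours regressor, and the number of neighbours `k` plays the role of the hyperparameter. The model, data and grid are assumptions for illustration. Libraries such as scikit-learn (`GridSearchCV`) and Optuna automate this loop.

```python
import random

def k_fold_indices(n_samples, n_folds=5, seed=0):
    """Shuffle indices once and split them into n_folds roughly equal folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[i::n_folds] for i in range(n_folds)]

def knn_predict(train_x, train_y, query, k):
    """1-D k-nearest-neighbours regression: average y of the k closest x values."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - query))[:k]
    return sum(train_y[i] for i in nearest) / k

def cv_mse(x, y, k, n_folds=5):
    """Mean squared error of k-NN, averaged over the cross-validation folds."""
    fold_errors = []
    for test_idx in k_fold_indices(len(x), n_folds):
        test_set = set(test_idx)
        train_idx = [i for i in range(len(x)) if i not in test_set]
        train_x = [x[i] for i in train_idx]
        train_y = [y[i] for i in train_idx]
        squared = [(knn_predict(train_x, train_y, x[i], k) - y[i]) ** 2 for i in test_idx]
        fold_errors.append(sum(squared) / len(squared))
    return sum(fold_errors) / n_folds

# Hypothetical noisy data: y = x^2 plus Gaussian noise
rng = random.Random(1)
x = [i / 10 for i in range(50)]
y = [xi ** 2 + rng.gauss(0, 0.5) for xi in x]

# Grid search: score every candidate and keep the lowest cross-validated error
grid = [1, 3, 5, 10, 20]
scores = {k: cv_mse(x, y, k) for k in grid}
best_k = min(scores, key=scores.get)
print(scores)
print("best k:", best_k)
```

Nested cross-validation would wrap this whole search in an outer cross-validation loop. The tuned model’s error is then estimated on folds that the search never saw.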

22. Machine Learning with Imbalanced Data

Udemy Course Link: Machine Learning with Imbalanced Data

Accuracy is the evaluation metric we typically use, but it is only informative when the outcome variable is balanced (contains roughly equal proportions of each class label). In practice, you will mostly encounter imbalanced datasets. On such data, a model that always predicts the majority class can achieve high accuracy while never detecting the minority class.

The course “Machine Learning with Imbalanced Data” by Soledad Galli will help you build good predictive models with imbalanced data. It teaches different evaluation metrics and when to apply each of them. It also covers undersampling, oversampling, ensemble methods for imbalanced datasets, cost-sensitive methods that penalize wrong decisions, and probability calibration.
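Here is a small sketch of why accuracy misleads on imbalanced data. The labels are made up, and only 5 of 100 cases are positive. A model that always predicts the majority class scores 95% accuracy yet has zero recall. Precision, recall and F1 are set to 0 when their denominators are zero, which is one common convention (scikit-learn exposes it through a `zero_division` parameter).

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for a binary classifier."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    tn = len(y_true) - tp - fp - fn
    accuracy = (tp + tn) / len(y_true)
    # Define the ratios as 0 when their denominator is 0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical imbalanced outcome: 5 positives (e.g. crashes) out of 100 cases
y_true = [1] * 5 + [0] * 95
# A "model" that always predicts the majority class
always_negative = [0] * 100

print(classification_metrics(y_true, always_negative))
# {'accuracy': 0.95, 'precision': 0.0, 'recall': 0.0, 'f1': 0.0}
```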

23. Forecasting Time Series Data with Facebook Prophet

Now that you are familiar with machine learning algorithms and code, the next step is to learn time series forecasting. The book “Forecasting Time Series Data with Facebook Prophet” guides you from basic to advanced time series forecasting using Prophet, a wonderful library open-sourced by Meta (formerly Facebook).

The book explains various time series forecasting concepts and their real-world use cases. It covers seasonalities (hourly, daily, monthly, yearly and custom), seasonality modes (additive and multiplicative), holidays (national, state and custom), and growth modes (linear, logistic and flat). It also covers trend changepoints, adding and analysing regressors, handling outliers and special events, estimating uncertainty intervals, model diagnostics and evaluation, cross-validation, performance metrics and productionizing Prophet.

24. Python OOP Course: Master Object-Oriented Programming

Udemy Course Link: Python OOP Course: Master Object-Oriented Programming

Once you are familiar with the details of Python, its packages and their modules, you may start writing your own custom code and want to make it available to millions of Python users through PyPI or Conda. This is where Python’s object-oriented programming (OOP) comes in. The course “Python OOP Course: Master Object-Oriented Programming” will help you learn OOP concepts in detail, and you can use this knowledge to create your own Python packages. It gives you the fundamental knowledge of how Python scripts, modules and packages form complex projects like Pandas, Matplotlib and Scikit-learn. The course covers classes and objects, class attributes vs instance attributes, class methods vs instance methods vs static methods, encapsulation, inheritance and magic methods.
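Here is a compact sketch that touches each concept the course lists: class vs instance attributes; instance, class and static methods; encapsulation via a read-only property; inheritance; and magic methods. The `Station` classes are hypothetical examples.

```python
class Station:
    """A transit station used to illustrate core OOP concepts."""

    # Class attribute: shared by every instance
    network = "Metro"

    def __init__(self, name, daily_riders):
        # Instance attributes: specific to each object
        self.name = name
        self._daily_riders = daily_riders  # leading underscore: internal by convention

    @property
    def daily_riders(self):
        """Encapsulation: read-only access to the internal attribute."""
        return self._daily_riders

    @classmethod
    def from_csv_row(cls, row):
        """Class method: an alternative constructor, e.g. from 'Central, 1200'."""
        name, riders = row.split(",")
        return cls(name.strip(), int(riders))

    @staticmethod
    def is_busy(riders, threshold=1000):
        """Static method: a helper that needs neither the class nor an instance."""
        return riders >= threshold

    def __repr__(self):
        # Magic method: controls how the object is displayed
        return f"{type(self).__name__}({self.name!r}, {self._daily_riders})"

    def __lt__(self, other):
        # Magic method: lets sorted() order stations by ridership
        return self._daily_riders < other._daily_riders


class InterchangeStation(Station):
    """Inheritance: reuses Station and adds the lines it serves."""

    def __init__(self, name, daily_riders, lines):
        super().__init__(name, daily_riders)
        self.lines = lines


stations = [Station.from_csv_row("Central, 1200"),
            Station("Harbour", 300),
            InterchangeStation("Junction", 800, ["Red", "Blue"])]
print(sorted(stations))
# [Station('Harbour', 300), InterchangeStation('Junction', 800), Station('Central', 1200)]
print(Station.is_busy(stations[0].daily_riders))  # True
```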

25. Applied Data Science using Pyspark

My next recommendation is to learn a big data framework like PySpark. Standard Python libraries can handle only a limited volume of data (typically what fits in a single machine’s memory), which is enough for almost all basic data analysis. But think about companies: today, even smaller ones can generate terabytes of data every day. To handle, say, 100 terabytes of data, you need something fast, reliable and scalable, and this is where PySpark comes in.

PySpark is the Python API for using Apache Spark, which is a parallel and distributed engine used to perform big data analytics. In the era of big data, PySpark is extensively used by Python users for performing data analytics on massive datasets and building applications using distributed clusters.

The book “Applied Data Science using Pyspark” gives you hands-on experience in analysing large volumes of data. It teaches the basics of PySpark, utility functions and visualisation, variable selection, supervised machine learning algorithms, model evaluation, unsupervised ML and recommendation algorithms, machine learning flows and automated pipelines, and deployment of machine learning models.

Note 1: The above books and courses will show you the tools and sometimes the path to solving a problem, but they won’t by themselves make you a good data analyst or data scientist. The key to becoming good at data science is to apply what you learn to real-world projects and problems. This gives you a real taste of the scope of real-world problems. Solving them with the tools you have learned will make you proficient, extend your problem-solving capabilities, and ultimately make you good at solving data science problems.

Note 2: I am not promoting any book, course, author, or organization. The recommended list (books and courses) is entirely based on my personal experience.

Note 3: The above list is dynamic. If I encounter a better book or course in the future, I will add it to the list or update the existing entries.

I hope you learned something new!